by Brian Mackay

Geovis Class Project @RyersonGeo, SA8905, Fall 2017

The concept for this project stems from the popularity of phone apps and computer gaming. It attempts to merge game creation software, graphic art and open source geographic data. A flight simulator was built in Unity 3D to virtually explore a natural landscape model created from Light Detection and Ranging (LiDAR) data obtained from the US Geological Survey (USGS).

The first step is to define the study area, a one-square-mile section of Santa Cruz Island off the coast of California. This area was selected for its dramatic elevation changes, natural character and rich cultural history. Once the study area is defined, the LiDAR data can be downloaded from the USGS Earth Explorer in .LAS file format.

Once the data is collected, ArcMap is used to turn it into a raster image that can be prepared for Unity 3D. The .LAS file was first brought into ArcMap to create the elevation classes. In ArcCatalog, open the properties of the .LAS file, go to the Statistics tab and click the Calculate Statistics button. Once the statistics are generated, an elevation map is displayed using several elevation classes. The next step is to convert the data to a raster using the LAS Dataset To Raster conversion tool in ArcToolbox. The resulting raster must then be converted to the .RAW file format in Photoshop before Unity can import it.
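As a hedged alternative to the Photoshop step, the Python sketch below writes a square grid of elevations directly to a 16-bit RAW heightmap. It assumes the elevations have already been exported from the ArcMap raster as rows of numbers (that export is not covered here). It also assumes you choose Depth 16 bit and Byte Order Windows (little-endian) in Unity's Import Raw dialog. The function name `to_raw16` is hypothetical.

```python
import struct


def to_raw16(elevations, path):
    """Write a square grid of elevations (list of rows of numbers) as a
    16-bit little-endian RAW heightmap, mapping min..max onto 0..65535.

    Returns (lo, hi), the minimum and maximum elevations in the grid.
    """
    rows = len(elevations)
    if rows == 0 or any(len(row) != rows for row in elevations):
        raise ValueError("heightmap must be a non-empty square grid")

    flat = [float(z) for row in elevations for z in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on perfectly flat terrain

    # Normalise each elevation to the full unsigned 16-bit range.
    values = [round((z - lo) / span * 65535) for z in flat]

    with open(path, "wb") as f:
        # "<" = little-endian ("Windows" byte order), "H" = unsigned 16-bit.
        f.write(struct.pack("<%dH" % len(values), *values))
    return lo, hi
```

The RAW file stores only relative heights. With this normalisation, the terrain height entered in Unity corresponds to `hi - lo`. Unity terrains also expect a heightmap resolution of 2^n + 1 (for example 513 or 1025), so the grid may need resampling first.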

The data is now ready for use in Unity. Unity 3D Personal (the free version) was used for the remainder of the project. After opening Unity 3D, the first step is to create a terrain and import the .RAW file. Click GameObject → 3D Object → Terrain, click the Settings button in the Inspector window, scroll down, click Import Raw and select your file.

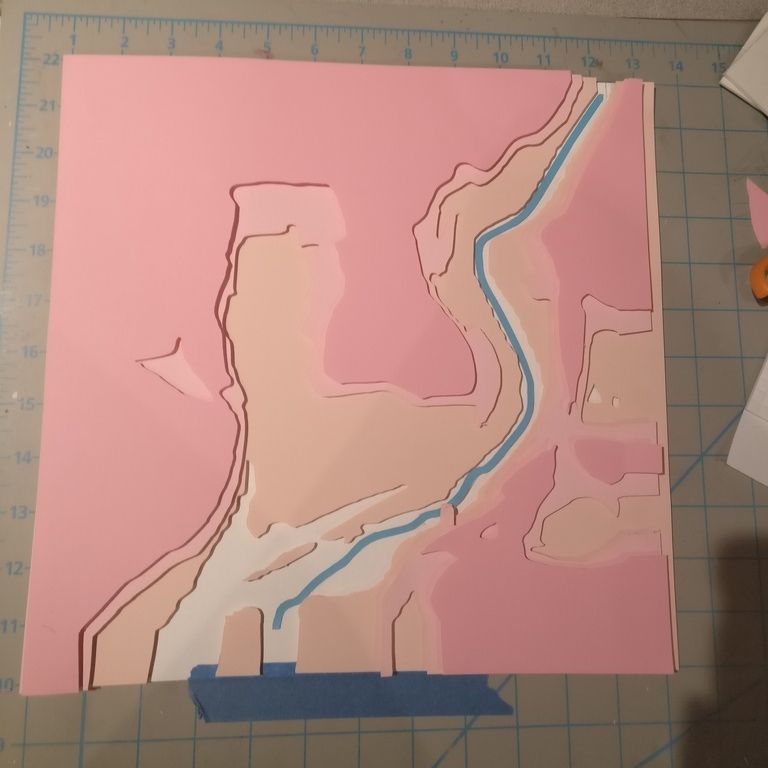

Next, define the size and height parameters to scale the terrain. The USGS data was in imperial units, so the dimensions had to be converted to meters, the unit Unity uses. After conversion, the length and width were each set to 1609 m (1 mile, giving a one-square-mile tile). The height was set to 276 m (906 ft), a value taken from the .LAS file's elevation classes in ArcMap (seen right and below). Once these parameters are set, you can use your graphic art skills to edit the terrain.
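The unit conversion is simple arithmetic. The Python sketch below reproduces the values above, assuming international feet and miles (the US survey foot differs by only about two parts per million). `terrain_size_m` is a hypothetical helper name.

```python
FT_TO_M = 0.3048        # international foot, exact by definition
MILE_TO_M = 1609.344    # international mile, exact by definition


def terrain_size_m(side_miles, relief_ft):
    """Return (width, length, height) in meters for a square terrain tile."""
    side = side_miles * MILE_TO_M
    return side, side, relief_ft * FT_TO_M


w, l, h = terrain_size_m(1, 906)
print(round(w), round(l), round(h))  # prints 1609 1609 276
```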

Editing the terrain starts in the Inspector window (seen below). The terrain was first smoothed to give it a natural appearance instead of the harsh edges of the raw data. Different textures and brushes were then used to create the most accurate representation of the natural landscape possible, with Google's satellite map as a reference for colours, textures and brush/tree cover.
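Unity's smoothing brush is applied interactively, and its exact algorithm is not described here. As a rough, hedged analogue, the Python sketch below applies a 3×3 mean (box) filter to a height grid. This softens the sharp steps typical of raw elevation data. The function `smooth` and its `passes` parameter are hypothetical.

```python
def smooth(grid, passes=1):
    """Apply a 3x3 mean filter to a 2D height grid `passes` times.

    Edge cells average only the neighbours that lie inside the grid.
    Returns a new grid; the input is not modified.
    """
    n_rows, n_cols = len(grid), len(grid[0])
    for _ in range(passes):
        out = [[0.0] * n_cols for _ in range(n_rows)]
        for i in range(n_rows):
            for j in range(n_cols):
                total, count = 0.0, 0
                # Visit the cell itself and its (up to) eight neighbours.
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        a, b = i + di, j + dj
                        if 0 <= a < n_rows and 0 <= b < n_cols:
                            total += grid[a][b]
                            count += 1
                out[i][j] = total / count
        grid = out
    return grid
```

A single spike of height 9 in the middle of a flat 3×3 grid becomes 1.0 at the centre and 2.25 in each corner after one pass. Extra passes smooth further at the cost of flattening real relief.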

This is a tedious process, and you can spend hours editing the terrain with these tools. The landscape is almost finished, but it still needs a sky. This is added by selecting the Main Camera in the Hierarchy window and then clicking Add Component → Rendering → Skybox in the Inspector window. With the landscape complete, it is time to build the flight simulator.

To build the plane, create each of its parts individually with GameObject → 3D Object → Cube, then arrange and scale each part using the Inspector window. Next, create a parent object with GameObject → Create Empty and drag and drop the individual airplane parts into it. This is necessary so that the C# code applies to the whole airplane and not just an individual part. Finally, a chase camera has to be attached to the plane so that the camera follows its movement. You can use the Inspector window to set the camera's coordinates identical to the plane's, then offset the camera behind and above the plane to a viewing angle you prefer.
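The camera offset described above can be expressed as simple vector arithmetic. The Python sketch below computes a camera position behind and above the plane from the plane's position and heading. It assumes Unity's convention of +y up and +z forward, and uses hypothetical offsets of 10 m back and 3 m up. It ignores pitch and roll for simplicity, whereas a camera parented to the plane in the Hierarchy inherits all of the plane's rotations.

```python
import math


def chase_camera_position(plane_pos, yaw_deg, back=10.0, up=3.0):
    """Return an (x, y, z) camera position `back` meters behind and `up`
    meters above the plane.

    yaw_deg is the heading about the vertical (y) axis: 0 faces +z and
    90 faces +x, matching how Unity rotates its forward vector.
    """
    yaw = math.radians(yaw_deg)
    forward = (math.sin(yaw), 0.0, math.cos(yaw))  # horizontal heading only
    x, y, z = plane_pos
    return (x - forward[0] * back, y + up, z - forward[2] * back)


print(chase_camera_position((0.0, 100.0, 0.0), 0.0))  # prints (0.0, 103.0, -10.0)
```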

C# coding was the final step of this project. It was used to program the airplane controls as well as the reset game button. To add a C# script to an object, select the object you want to program in the Hierarchy window and click Add Component → New Script. The C# script below was used to control the airplane.
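The Python sketch below is a hedged illustration of the kind of logic a simple flight controller contains; it is not the author's C# script. It advances a plane by one frame. Pitch and yaw inputs in the range -1 to 1 change the plane's angles; Unity's `Input.GetAxis` returns values in this range for a keyboard or joystick axis. The plane then moves forward at a constant speed. The `Plane` class, the `step` function, the 30 m/s speed, the 45°/s turn rate and the nose-up-positive pitch convention are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Plane:
    x: float = 0.0
    y: float = 100.0     # start 100 m above the ground (hypothetical)
    z: float = 0.0
    pitch: float = 0.0   # degrees, positive = nose up (convention for this sketch)
    yaw: float = 0.0     # degrees, 0 = facing +z


def step(p, pitch_input, yaw_input, dt, speed=30.0, turn_rate=45.0):
    """Advance the plane by one frame of length dt seconds.

    pitch_input and yaw_input are axis readings in [-1, 1].
    """
    # Turn first, clamping pitch so the plane cannot loop over the vertical.
    p.pitch = max(-80.0, min(80.0, p.pitch + pitch_input * turn_rate * dt))
    p.yaw = (p.yaw + yaw_input * turn_rate * dt) % 360.0

    # Move along the forward vector: heading from yaw, climb from pitch.
    pr, yr = math.radians(p.pitch), math.radians(p.yaw)
    p.x += math.cos(pr) * math.sin(yr) * speed * dt
    p.y += math.sin(pr) * speed * dt
    p.z += math.cos(pr) * math.cos(yr) * speed * dt
    return p


p = step(Plane(), pitch_input=0.0, yaw_input=0.0, dt=1.0)
print(p.x, p.y, p.z)  # prints 0.0 100.0 30.0
```

In a Unity script the same update would run once per frame, with `dt` taken from the frame time.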

The script sets parameters for the airplane's speed and handling, as well as the behaviour of the chase camera. Finally, a reset button was programmed using another C# script, as seen below.
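A reset button typically returns the game to its starting state. Unity projects often do this by reloading the active scene with `SceneManager.LoadScene`. The Python sketch below shows the same idea in a framework-free form, assuming the game state can be snapshotted when play starts. The `Game` class and its fields are hypothetical and are not the author's reset script.

```python
import copy


class Game:
    """Hypothetical game state holder whose reset restores the spawn state."""

    def __init__(self, plane_state):
        # Deep-copy so later changes to the live state cannot alter the snapshot.
        self._spawn = copy.deepcopy(plane_state)
        self.plane = copy.deepcopy(plane_state)

    def reset(self):
        """Called when the reset button is pressed."""
        self.plane = copy.deepcopy(self._spawn)


game = Game({"position": [0.0, 100.0, 0.0], "speed": 30.0})
game.plane["position"][2] = 500.0   # fly somewhere
game.reset()
print(game.plane["position"])       # prints [0.0, 100.0, 0.0]
```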

The flight simulator prototype is almost complete. The remaining steps are adding music and testing the game. To add a music file, go to GameObject → Create Empty, then in the Inspector window click Add Component → Audio → Audio Source and select the file. Now it’s time to test the flight simulator by clicking the Game tab at the top of the viewer and pressing Play.

Once testing is complete, the game is ready for export and publishing. Click File → Build Settings, select the platform you want to build for (in this case WebGL), click Build and Run, and upload the files to a suitable web host. The game is now complete. Try it here!

There are a few limitations to using Unity 3D with geographic data. The first is the scaling of objects, such as the airplane and trees. This was problematic because the airplane was only about 5 m long and 5 m wide, which makes other objects appear overly large. The second limitation is the terrain editor and visual art component. Without previous experience using Unity 3D or any graphic arts background, it was extremely time-consuming to replicate a natural landscape virtually. The selection of terrain materials in the Personal version was also limited to a few grasses, rocks, sand and trees. Other limitations relate to the user’s programming skills. In particular, the colliders could not be programmed successfully; they were intended to create invisible barriers that keep the airplane from flying off the landscape. Despite these limitations, Unity 3D appears to offer an endless creative canvas for game creation, landscape modeling and alteration, and conceptual design. These capabilities are often very limited in other GIS software.
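One possible workaround for the collider problem is to clamp the airplane's position in script each frame so that it cannot leave the terrain. This is a swapped-in technique, not what the project used. The Python sketch below assumes the terrain spans 0 to 1609.344 m on the x and z axes (the one-mile tile described earlier) and uses a hypothetical 600 m altitude ceiling. A fuller solution would also compare the altitude against the terrain height beneath the plane.

```python
TERRAIN_SIZE_M = 1609.344   # one-mile tile, matching the terrain settings
CEILING_M = 600.0           # hypothetical maximum altitude


def clamp(value, lo, hi):
    """Limit value to the closed interval [lo, hi]."""
    return max(lo, min(hi, value))


def keep_in_bounds(pos, size=TERRAIN_SIZE_M, ceiling=CEILING_M):
    """Return the (x, y, z) position pushed back inside the flyable box."""
    x, y, z = pos
    return (clamp(x, 0.0, size), clamp(y, 0.0, ceiling), clamp(z, 0.0, size))


print(keep_in_bounds((-5.0, 50.0, 2000.0)))  # prints (0.0, 50.0, 1609.344)
```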


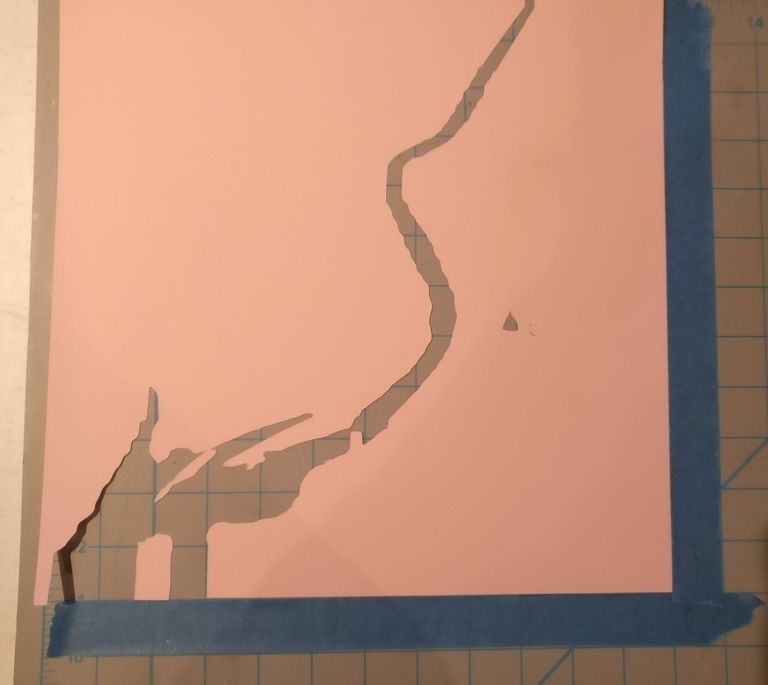

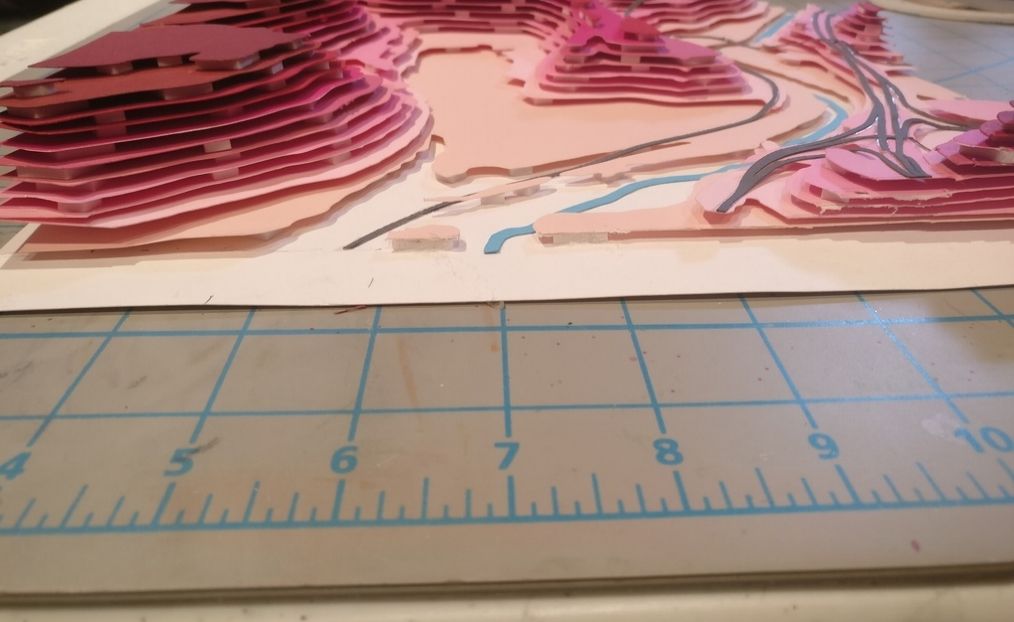

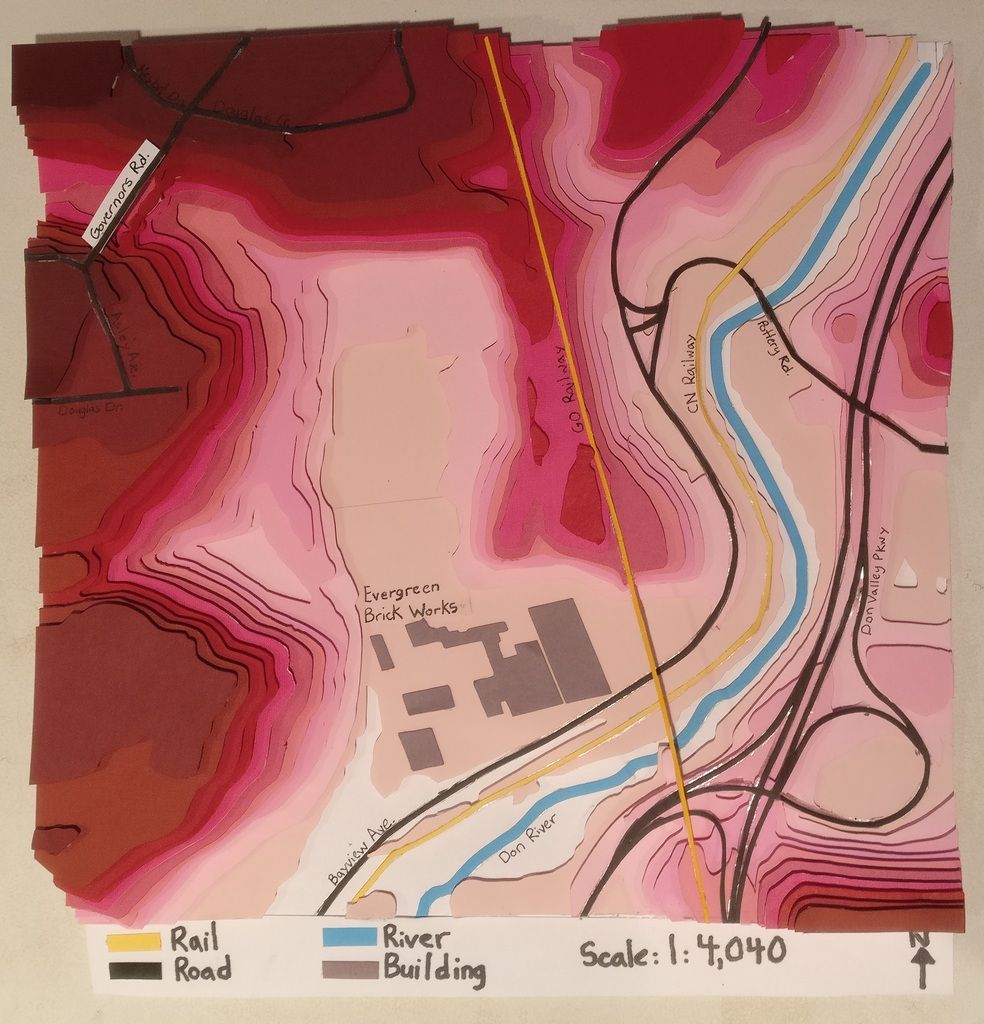

The front and back sides of the cube are shown in the photos to the left and below.

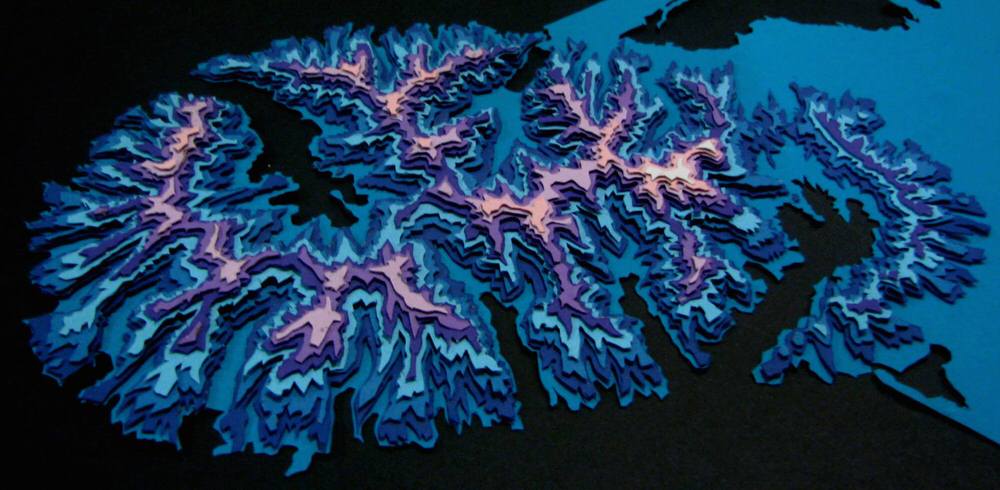

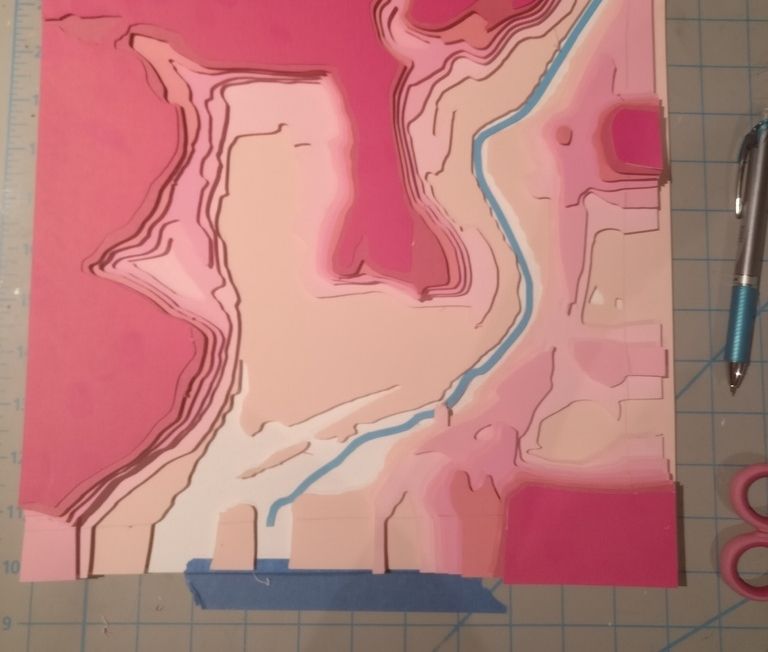

r each layer and the sides of the box were cut out, the boards were then arranged in chronological order. The boards were then painted in various shades of blue, as shown in the photo to the left. The corresponding year was then written onto each model board with either silver or black permanent marker (whichever colour was more visible on the painted board). The key to this step was ensuring that the layers were in chronological order and that each layer was the same distance apart. Keeping the layers evenly spaced (1.25 inches apart) allowed the model to accurately depict the shrinking of the ice cap.

to appear as if an individual opened the top layer to look at the depiction of the shrinking polar ice cap through the 3D model, as shown in the photo below. The final dimensions of the 3D Paper model cube project are 8” x 10” x 10”.