By Samantha Perry

Geovis Project Assignment @RyersonGeo, SA8905, Fall 2018

My goal was to create an animated time-series map using CARTO to visualize the spread of invasive species across Ontario. In Ontario there are dozens of invasive species posing a threat to the health of our lakes, rivers, and forests. These intruding species can spread quickly due to the absence of natural predators, often damaging native species and ecosystems, and resulting in negative effects on the economy and human health. Mapping the spread of these invasive species is beneficial for showing the extent of the affected areas which can potentially be used for research and remediation purposes, as well as awareness for the ongoing issue. For this project, five of the most problematic or wide-spread invasive species were included in an animated-interactive map to show their spatial and temporal distribution.

The final animated-interactive map can be found at: https://perrys14.carto.com/builder/7785166c-d0cf-41ac-8441-602f224b1ae8/embed

Data

- The first dataset used was collected from the Ontario Ministry of Natural Resources and Forestry and contained information on invasive species observed in the province from 1982 to 2012. The data was provided as a shapefile, with polygons representing the affected areas.

- The second dataset was downloaded from the Early Detection & Distribution Mapping System (EDDMapS) Ontario website. The dataset included information about invasive species identified between 2010 and 2018. I obtained this dataset to supplement the Ontario Ministry dataset in order to provide a more up-to-date distribution of the species.

Software

CARTO is a location-intelligence based website that offers easy to use mapping and analysis software, allowing you to create visually appealing maps and discover key insights from location data. Using CARTO, I was able to create an animated-interactive map displaying the invasive species data. CARTO’s Time-Series Widget can be used to display large numbers of points over time. This feature requires a map layer containing point geometries with a timestamp (date), which is included in the data collected for the invasive species.

CARTO also offers an interactive feature to their maps, allowing users control some aspects of how they want to view the data. The Time-Series Widget includes animation controls such as play, stop, and pause to view a selected range of time. In addition, a Layer Selector can be added to the map so the user is able to select which layer(s) they wish to view.

Limitations

In order to create the map, I created a free student account with CARTO. Limitations associated with a free student account include a limit on the amount of data that can be stored, as well as a maximum of 8 layers per map. This limits the amount of invasive species that can be mapped.

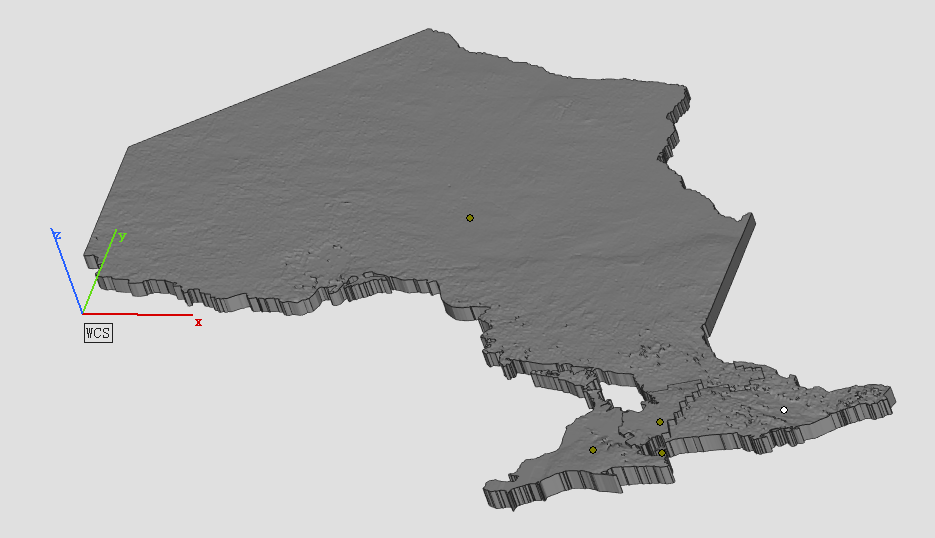

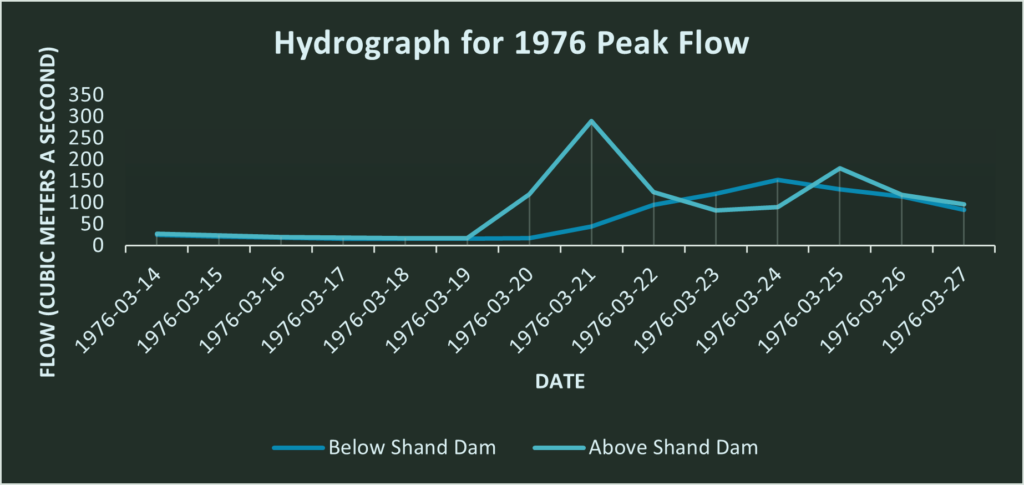

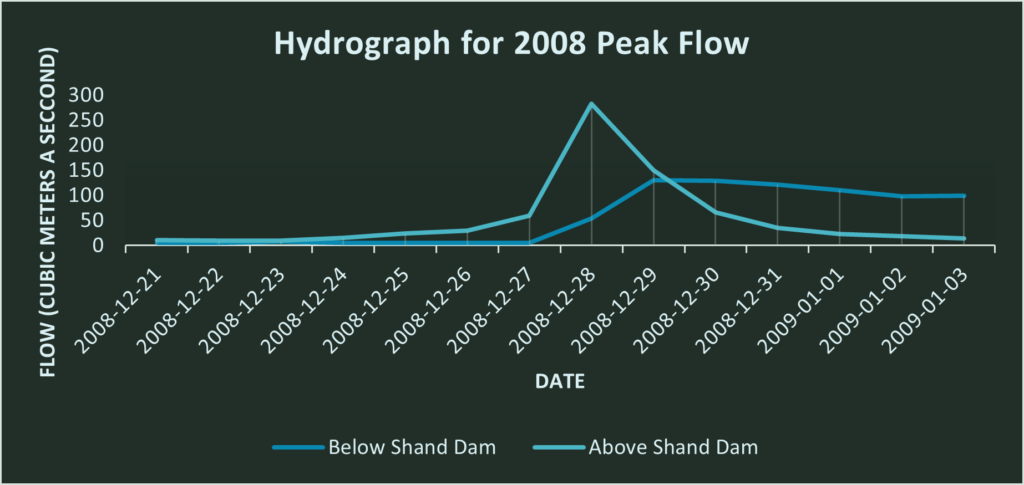

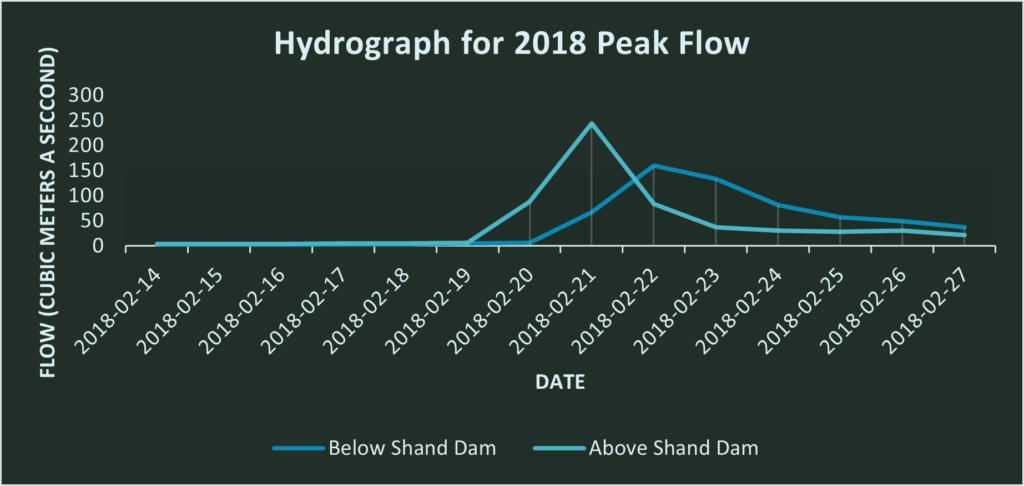

Additionally, only one Time-Series Widget can be included per map, meaning that I could not include a time-series animation for each species individually, as I originally intended to. Instead, I had to create one time-series animation layer that included all five of the species. Because this layer included thousands of points, the map looks dark and cluttered when zoomed out to the full extent of the province (Figure 1). However, when zoomed in to specific areas of the province, the points do not overlap as much and the overall animation looks cleaner.

Another limitation to consider is that not all the species’ ranges start at the same time. As can be seen in Figure 1 below, the time slider on the map shows that there is a large increase in species observations around 2004. While it is possible that this could simply be due to an increase in observations around that time, it is likely because some of the species’ ranges begin at that time.

Figure 1. Layer showing all five invasive species’ ranges.

Tutorial

Step 1: Downloading and reviewing the data

The Ontario Ministry of Natural Resources and Forestry data was downloaded as a polygon shapefile using Scholars GeoPortal, while the EDDMapS Ontario dataset was downloaded as a CSV file from their website.

Step 2: Selection of species to map

Since the datasets included dozens of different invasive species in the datasets, it was necessary to select a smaller number of species to map. Determining which species to include involved some brief research on the topic, identifying which species are most prevalent and problematic in the province. The five species selected were the Eurasian Water-Milfoil, Purple Loosestrife, Round Goby, Spiny Water Flea, and Zebra Mussel.

Step 3: Preparing the data for upload to CARTO

Since the time-series animation in CARTO is only available for point data, I had to convert the Ontario Ministry polygon data to points. To do this I used ArcMap’s “Feature to Point” tool which created a new point layer from the polygon centroids. I then used the “Add XY Coordinates” tool to get the latitude and longitude of each point. Finally, I used the “Table to Excel” conversion tool to export the layer’s attribute table as an excel file. This provided me with a table with all invasive species point data collected by the Ontario Ministry that could be uploaded to CARTO.

Next, I created a table that included the information for the five selected species from both sources. I selected only the necessary columns to include in the new table, including; Species Name, Observation Date, Year, Latitude, Longitude, and Observation Source. This combined table was then saved as an excel file to be uploaded to CARTO.

Finally, I created 5 additional tables for each of the species separately. These were later used to create map layers that show each species’ individual distribution.

Step 4: Uploading the datasets to CARTO

After creating a free student account with CARTO, I uploaded the six datasets as excel files. Once uploaded, I had to change the “Observation Date” column from a “string” to “date” data type for each dataset. A “date” data type is required for the time-series animation to run.

Step 5: Geocoding datasets

Each dataset added to the map as a layer had to be geocoded. Using the latitude and longitude columns previously added to the Excel file, I geocoded each of the five species’ layers.

Step 6: Create time-series widget to display temporal distribution of all species

After creating a blank map, I added the Excel file that included all the invasive species data as a layer. I then added a Time-Series Widget to allow for the temporal animation. I then selected Observation Date as the column to be displayed, meaning that the point data will be organized by observation date. I chose to organize the buckets, or groupings, for the corresponding time-slider by year.

Since “cumulative” was not an option for the Time-Series layer, I had to use CARTCSS to edit the code for the aggregation style. Changing the style from “linear” to “cumulative” allowed the points to remain on the screen for the duration of the animation, letting the user see the entire species’ range in the province. The updated CSS code can be seen in the screenshots below.

Step 7: Creating five additional layers for each species’ range

Since I could only add one Time-Series Widget per map, and the layer with the animation looks cluttered at some extents, I decided to create five additional layers that show each of the species’ individual observation data and range.

Step 8: Customizing layer styles

After adding all of the layers, a colour scheme was selected where each of the species’ was represented by a different colour to clearly differentiate between them. Colours that are generally associated with the species were selected. For example, the colour purple was selected to represent Purple Loosestrife, which is a purple flowering plant. The “multiply” style option was selected, meaning that areas with more or overlapping occurrences of invasive species are a darker shade of the selected colour.

A layer selector was included in the legend so that users can turn layers on or off. This allows them to clearly see one species’ distribution at a time.

Step 9: Publish map

Once all of the layers were configured correctly, the map was published so it could be seen by the public.